| GISdevelopment.net ---> AARS ---> ACRS 2000 ---> Image Processing |

Comparison of Two Texture Features for Multispectral Imagery Analysis

Pornphan DULYAKARN, Yuttapong RANGSANSERI, and Punya THITIMAJSHIMA

Department of Telecommunications Engineering, Faculty of Engineering,

King Mongkut's Institute of Technology Ladkrabang, Bangkok, 10520, THAILAND

Tel: (66-2)-326-9967 Fax: (66-2)-326-9086

E-mail: doll_dulya@hotmail.com, kryuttha@kmitl.ac.th, ktpunya@kmitl.ac.th

Keywords: Gray-level Co-occurrence Matrix, Fourier Transform, Texture Analysis, Neural Network, Multispectral Image

Abstract

Two feature extraction methods, the gray-level co-occurrence matrix and the Fourier transform, are compared for land cover classification, which is treated here as a problem of image texture. With these features, supervised classification is carried out by a multi-layer perceptron (MLP) neural network trained with the back-propagation (BP) algorithm. The comparison shows that the features derived from the gray-level co-occurrence matrix give better results than those derived from the Fourier transform.

1. Introduction

Texture is one of the most important defining characteristics of an image. It is characterized by the spatial distribution of gray levels in a neighborhood (Jain et al., 1995). To capture the spatial dependence of the gray-level values that contribute to the perception of texture, a two-dimensional dependence matrix is used for texture analysis, because texture is expressed through both pixel positions and pixel values. There are many approaches to texture classification. The gray-level co-occurrence matrix is a well-known statistical technique for feature extraction. However, other statistical techniques exist, including one that uses the absolute differences between pairs of gray levels in an image segment and one that derives classification measures from the Fourier spectrum of image segments; the latter is the alternative compared here.

Texture features derived from the gray-level co-occurrence matrix and the Fourier transform are used as input data for supervised classification. In this experiment, a multilayer perceptron (MLP) neural network trained with the back-propagation (BP) algorithm is chosen as the classifier.

2. Texture Features

The features used in this experiment are extracted by two methods, the gray-level co-occurrence matrix and the Fourier transform, both of which are statistical methods. Each is described in the following subsections.

2.1 Gray-level Co-occurrence Matrix

The gray-level co-occurrence matrix is a two-dimensional matrix of joint probabilities $P_{d,r}(i,j)$ between pairs of pixels separated by a distance $d$ in a given direction $r$. It is popular in texture description and is based on the repeated occurrence of some gray-level configuration in the texture; this configuration varies rapidly with distance in fine textures and slowly in coarse textures (Haralick et al., 1973).
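As an illustration of the definition above, the following minimal sketch counts gray-level pairs for one displacement and normalizes the counts to joint probabilities. The function name `glcm`, the encoding of distance and direction as a pixel offset `(dx, dy)`, and the small test segment are assumptions made for this example; the paper does not state which distances, directions, or number of gray levels were used, nor whether the matrix was made symmetric.

```python
import numpy as np

def glcm(image, dx, dy, levels):
    """Normalized co-occurrence matrix for the pixel offset (dx, dy).

    image  : 2-D array of integer gray levels in [0, levels)
    dx, dy : column and row offsets that encode the distance d and direction r
    """
    image = np.asarray(image)
    rows, cols = image.shape
    counts = np.zeros((levels, levels), dtype=np.float64)
    # Visit every pixel whose displaced neighbor still lies inside the image.
    for y in range(max(0, -dy), min(rows, rows - dy)):
        for x in range(max(0, -dx), min(cols, cols - dx)):
            counts[image[y, x], image[y + dy, x + dx]] += 1
    total = counts.sum()
    # Dividing by the number of pairs turns counts into joint probabilities.
    return counts / total if total > 0 else counts

# Hypothetical 4 x 4 segment with four gray levels.
segment = np.array([[0, 0, 1, 1],
                    [0, 0, 1, 1],
                    [0, 2, 2, 2],
                    [2, 2, 3, 3]])
P = glcm(segment, dx=1, dy=0, levels=4)  # d = 1, horizontal direction
```

This version is not symmetric: the pair (i, j) is counted only in the direction of the offset. Adding the transpose of the count matrix before normalizing would give the symmetric variant.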

The texture features extracted from the gray-level co-occurrence matrix for classification in this experiment are the following:

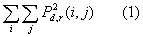

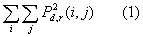

Energy:

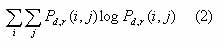

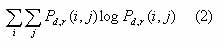

Entropy:

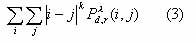

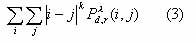

Contrast: (typically k = 2, l = 1)

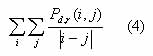

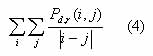

Homogeneity:
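The four criteria listed above can be sketched in code as follows. The formulas used here are the forms in which these features are commonly stated and are an assumption of this example, not a transcription of the paper's equations: energy as the sum of squared probabilities, entropy with a negative sign and base-2 logarithm, contrast as the sum of |i - j|^k P^l, and homogeneity as the sum of P^l / |i - j|^k over i ≠ j, with the typical k = 2 and l = 1. The function name `glcm_features` is hypothetical.

```python
import numpy as np

def glcm_features(P, k=2, l=1):
    """Energy, entropy, contrast and homogeneity of a normalized GLCM P."""
    P = np.asarray(P, dtype=np.float64)
    i, j = np.indices(P.shape)
    diff = np.abs(i - j).astype(np.float64)

    energy = np.sum(P ** 2)

    # Skip zero entries, since 0 * log(0) is taken as 0 (assumed convention).
    nonzero = P > 0
    entropy = -np.sum(P[nonzero] * np.log2(P[nonzero]))

    contrast = np.sum(diff ** k * P ** l)

    # Homogeneity is summed over off-diagonal entries only (i != j).
    off_diagonal = diff > 0
    homogeneity = np.sum(P[off_diagonal] ** l / diff[off_diagonal] ** k)

    return {"energy": energy, "entropy": entropy,
            "contrast": contrast, "homogeneity": homogeneity}

# A uniform 2 x 2 matrix, used only to make the arithmetic easy to follow.
P_uniform = np.full((2, 2), 0.25)
features = glcm_features(P_uniform)
```

For the uniform matrix every entry is 0.25, so the energy is 4 × 0.0625 = 0.25 and the entropy is 2 bits; the two off-diagonal entries each contribute 0.25 to both contrast and homogeneity.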

2.2 Fourier Transform

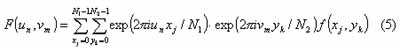

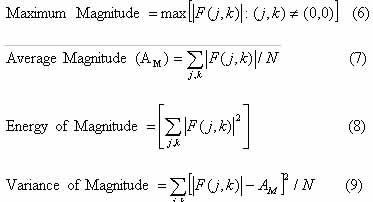

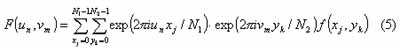

The classification measures derived from the Fourier spectrum of image segments require the calculation of the fast Fourier transform (FFT) of each segment and the definition of features in terms of the amplitudes of the spatial frequencies. The discrete Fourier transform $F(u_n, v_m)$ of a digitized image segment $f(x_j, y_k)$ of size $N_1 \times N_2$ is defined by

where $u_n$ and $v_m$ are the discrete spatial frequencies, and $x_j$ and $y_k$ are the pixel positions.

The set of features based on the power spectrum consists of four statistical measures. If $|F(j,k)|$ is the matrix containing the amplitudes of the spectrum and $N$ is the number of frequency components, then these measures are given by (Augusteijn et al., 1995)
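A minimal sketch of such spectrum-based features is given here. It assumes that the four measures are the maximum, the average, the energy (sum of squares), and the variance of the amplitudes, which is how this feature set is usually stated; the function name `fourier_features` is hypothetical, NumPy's unnormalized `fft2` is used, and the zero-frequency component is kept, all of which may differ from the authors' implementation.

```python
import numpy as np

def fourier_features(segment):
    """Four statistics of the amplitude spectrum |F| of an image segment."""
    segment = np.asarray(segment, dtype=np.float64)
    magnitude = np.abs(np.fft.fft2(segment))  # amplitudes |F(j, k)|
    n = magnitude.size                        # number of frequency components N
    average = magnitude.sum() / n
    return {
        "maximum": magnitude.max(),
        "average": average,
        "energy": np.sum(magnitude ** 2),
        "variance": np.sum((magnitude - average) ** 2) / n,
    }

# A constant 4 x 4 segment: all of its spectrum sits in the zero-frequency term.
flat = np.ones((4, 4))
features = fourier_features(flat)
```

For the constant segment the only nonzero amplitude is the zero-frequency term, equal to 16, so the average over the 16 components is 1 and the energy is 256. In practice the zero-frequency term mostly reflects mean brightness, and whether to exclude it is a design choice the source does not settle.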

3. Neural Network Classification

Multi-layer perceptrons are feed-forward networks with one or more layers of nodes between the input and output nodes. These additional layers contain hidden units or nodes that are not directly connected to both the input and output nodes. The capabilities of multi-layer perceptrons stem from the nonlinearities used within nodes. The behavior of a multi-layer network with nonlinear units is complex. A multi-layer network can be trained with a back-propagation learning algorithm (Rumelhart et al., 1986). Learning via back-propagation involves the presentation of pairs of input and output vectors. The actual output for a given vector is compared with the desired or target output. If there is no difference, no weights are changed; otherwise, the weights are adjusted to reduce the difference. This learning method essentially uses a gradient search technique to minimize the cost function that is equal to the mean square difference between the desired and actual outputs. The network is initialized by setting random weights and thresholds, and the weights are updated with each iteration to minimize the mean squared error.

The back-propagation training algorithm is an iterative gradient algorithm designed to minimize the mean square error between the actual output of a multi-layer feed-forward perceptron and the desired output. It requires continuous, differentiable nonlinearities (Dulyakarn et al., 2000).
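The training procedure described above can be sketched for a network with a single hidden layer. This is an illustrative implementation only: the sigmoid nonlinearity, the hidden-layer size, the learning rate, the number of epochs, batch (rather than per-pattern) updates, and the toy input and target vectors are all assumptions, since the paper does not report its network configuration. Constant factors of the gradient are folded into the learning rate.

```python
import numpy as np

def sigmoid(z):
    # Continuous, differentiable nonlinearity, as back-propagation requires.
    return 1.0 / (1.0 + np.exp(-z))

def train_mlp(X, T, hidden=4, rate=0.5, epochs=2000, seed=0):
    """Train a one-hidden-layer perceptron by gradient descent on the MSE."""
    rng = np.random.default_rng(seed)
    # Random initial weights and thresholds (biases).
    W1 = rng.uniform(-0.5, 0.5, (X.shape[1], hidden))
    b1 = rng.uniform(-0.5, 0.5, hidden)
    W2 = rng.uniform(-0.5, 0.5, (hidden, T.shape[1]))
    b2 = rng.uniform(-0.5, 0.5, T.shape[1])
    errors = []
    for _ in range(epochs):
        # Forward pass: input -> hidden -> output.
        H = sigmoid(X @ W1 + b1)
        Y = sigmoid(H @ W2 + b2)
        diff = Y - T                      # actual minus desired output
        errors.append(float(np.mean(diff ** 2)))
        # Backward pass: propagate the error through the sigmoid derivatives.
        delta_out = diff * Y * (1.0 - Y)
        delta_hid = (delta_out @ W2.T) * H * (1.0 - H)
        # Gradient step; a zero difference leaves the weights unchanged.
        W2 -= rate * (H.T @ delta_out) / len(X)
        b2 -= rate * delta_out.mean(axis=0)
        W1 -= rate * (X.T @ delta_hid) / len(X)
        b1 -= rate * delta_hid.mean(axis=0)
    return (W1, b1, W2, b2), errors

# Toy input/target pairs standing in for texture feature vectors and classes.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
T = np.array([[0.0], [1.0], [1.0], [1.0]])
params, errors = train_mlp(X, T)
```

The list `errors` records the mean squared error at each epoch, so its trend shows whether the gradient search is reducing the cost function.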

4. Experimental Results

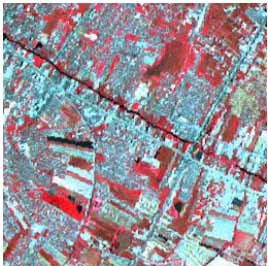

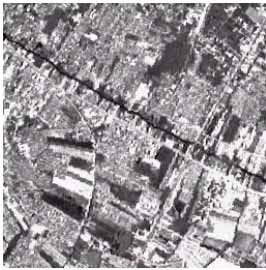

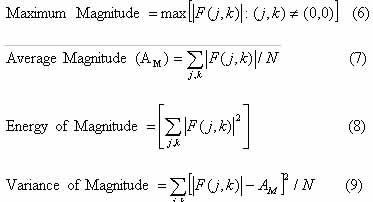

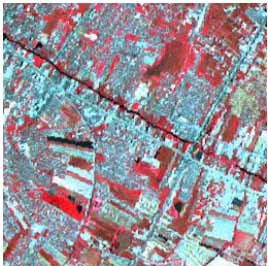

The multispectral image used in this experiment is an ADEOS image composed of 3 bands, each of 256 × 256 pixels. The test area, shown in Figure 1(a), is a region of Bangkok, Thailand. Before the data are used as input, the interband correlation is removed by the Karhunen-Loève Transform (KLT) (Dulyakarn et al., 1999). The transformed image is shown in Figure 1(b). The two sets of texture features are then calculated using the gray-level co-occurrence matrix and the Fourier transform, as described in Section 2. Supervised classification with a multi-layer perceptron trained by the back-propagation algorithm is then applied. The classification results obtained with the two texture features are compared in Table 1, which shows that the texture features from the gray-level co-occurrence matrix give better results than those from the Fourier transform.
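The decorrelation step can be illustrated with a short sketch of the KLT computed from the band covariance matrix. The function name `klt` and the synthetic 8 × 8 three-band image are assumptions made for this example; the synthetic data are not the ADEOS scene, and details such as whether the authors used the covariance or the correlation matrix are not given in the paper.

```python
import numpy as np

def klt(image):
    """Karhunen-Loeve transform of an image shaped (rows, cols, bands).

    Returns the decorrelated components, ordered by decreasing variance,
    together with those variances (eigenvalues of the band covariance).
    """
    rows, cols, bands = image.shape
    pixels = image.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    covariance = np.cov(centered, rowvar=False)       # bands x bands
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    order = np.argsort(eigenvalues)[::-1]             # largest variance first
    components = centered @ eigenvectors[:, order]
    return components.reshape(rows, cols, bands), eigenvalues[order]

# Synthetic, strongly correlated bands used only as placeholder data.
rng = np.random.default_rng(0)
base = rng.normal(size=(8, 8))
image = np.stack([base,
                  0.9 * base + 0.1 * rng.normal(size=(8, 8)),
                  0.5 * base + 0.1 * rng.normal(size=(8, 8))], axis=-1)
components, variances = klt(image)
first_component = components[:, :, 0]  # analogue of the image in Figure 1(b)
```

After the transform the components are mutually uncorrelated, and the first one carries the largest share of the variance, which is why a single principal component image can stand in for the three original bands.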

Figure 1 The ADEOS image acquired over the region of Bangkok, Thailand: (a) the original multispectral image; (b) the first principal component image resulting from the KLT.

Table 1 Classification results.

| Class | Number of Tested Pixels | Gray-level Co-occurrence Matrix (%) | Fourier Transform (%) |
|---|---|---|---|
| 1 | 1273 | 85.53 | 81.34 |
| 2 | 773 | 76.45 | 74.16 |
| 3 | 782 | 70.47 | 67.58 |
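The per-class figures in Table 1 can be combined into a single pixel-weighted percentage for each method. The paper does not report such an overall figure, so the calculation here is a derived illustration, and it assumes that each percentage is the share of correctly classified pixels among the tested pixels of that class.

```python
# Values copied from Table 1.
tested_pixels = [1273, 773, 782]
glcm_percent = [85.53, 76.45, 70.47]
fourier_percent = [81.34, 74.16, 67.58]

def weighted_accuracy(counts, percentages):
    """Average of per-class percentages, weighted by tested pixels per class."""
    return sum(c * p for c, p in zip(counts, percentages)) / sum(counts)

overall_glcm = weighted_accuracy(tested_pixels, glcm_percent)
overall_fourier = weighted_accuracy(tested_pixels, fourier_percent)
print(f"GLCM: {overall_glcm:.2f}%  Fourier: {overall_fourier:.2f}%")
```

Under this weighting the co-occurrence features remain ahead of the Fourier features overall, in line with the per-class comparison.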

5. Conclusion

A comparative study of two texture features, derived from the gray-level co-occurrence matrix and from the Fourier transform, has been presented. The experimental results, expressed as percentages of correct classification, show that texture analysis using the gray-level co-occurrence matrix gives better results than the texture features derived from the Fourier transform.

Acknowledgement

The authors wish to thank the National Research Council of Thailand (NRCT) for providing the satellite image data.

References

- Augusteijn, M. F., Clemens, L. E., and Shaw, K. A., 1995. Performance evaluation of texture measures for ground cover identification in satellite images by means of a neural network classifier. IEEE Trans. Geoscience and Remote Sensing, 33(3), pp. 616-626.

- Dulyakarn, P., Rangsanseri, Y., and Thitimajshima, P., 1999. Segmentation of multispectral images based on multithresholding. In: 2nd International Symposium on Operationalization of Remote Sensing, The Netherlands.

- Dulyakarn, P., Rangsanseri, Y., and Thitimajshima, P., 2000. Textural classification of urban environment using gray-level co-occurrence matrix approach. In: 2nd International Conference on Earth Observation and Environmental Information, Cairo, Egypt.

- Haralick, R. M., Shanmugam, K., and Dinstein, I., 1973. Textural features for image classification. IEEE Trans. Syst., Man, Cybern., SMC-3, pp. 610-621.

- Jain, R., Kasturi, R., and Schunck, B. G., 1995. Machine Vision. McGraw-Hill International Editions, pp. 234-239.

- Sonka, M., Hlavac, V., and Boyle, R., 1993. Image Processing, Analysis, and Machine Vision. Chapman & Hall Inc., pp. 482-485.