| GISdevelopment.net ---> AARS ---> ACRS 1997 ---> GIS |

Development of New User Interface for 3D GIS using Mobile Terminal

Akira Takuma, Ryosuke Shibasaki and Minoru Fujii

Institute of Industrial Science, University of Tokyo

7-22-1, Roppongi, Minato-ku, Tokyo, 106 Japan

Tel: +81-3-3402-6231 Fax: +81-3-3408-8268

E-mail: takuma@shunji.iis.u-tokyo.ac.jp

Abstract

The authors developed a new user interface for 3D GIS that allows users to retrieve necessary information from the database simply by clicking "REAL" objects in a live video image. First, we developed a "Fixed-position" system using a video camera, a workstation with a 3D graphics library, and a 3D GIS database to check the validity of our idea. We are now developing a "Mobile" system using a mobile terminal. This terminal consists of a personal computer, a CCD video camera with Differential GPS (to obtain location data) and a Fiber Optic Gyroscope (to obtain angle data), and a 3D GIS database. In the future, this system will be useful for "personal navigation", disaster investigation, updating GIS databases, daily inspection of infrastructure, information guide systems, and so forth.

Introduction

This research proposes a new user interface for 3-dimensional GIS which enables us to retrieve information about an object in the "Real World" (not in the Cyber World) simply by pointing at it in a live video image. This concept is an application of "Augmented Reality (AR)", which is now attracting wide attention in the computer vision community. AR enables "fusing" the contents of a database with the "Real World" by embedding objects from the spatial database into a live video image. In this sense, AR is closer to human perception than Virtual Reality.

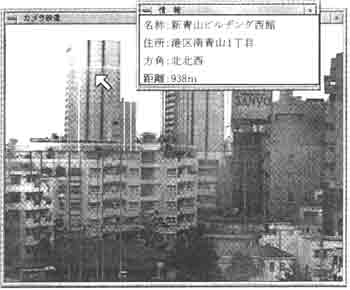

Building on the development of a "Fixed-position" system, which validated the concept of this user interface (see Reference 2), development of a "Mobile" system is now under way. Both systems allow a user to click an object in a real image on the computer display to retrieve the information (attribute data) of that object (see [Fig-1]).

[Fig-1] The result of using the "Fixed-position" system (name, address, direction and distance from the viewpoint are shown in Japanese characters)

The underlying process of these systems is as follows:

- Capture a real image from the user's viewpoint through a video camera.

- Create a CG image of the view from the same place using the 3D spatial data, so that the CG image can be exactly overlaid on the video image. Exact overlay of the video image with the CG image can be achieved by using the location data and view angle data of the video camera.

- With the exact overlay, each object in the video image is linked with the corresponding object in the CG image.

- The user can point at an object (e.g. a building) in the video image with a mouse pointer to retrieve information about the corresponding object in the CG image.
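The steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: it assumes an ideal pinhole camera without lens distortion or roll, local east/north/up coordinates in metres, buildings simplified to boxes, and a click test against each building's projected bounding rectangle instead of a rendered CG image. All names (`Camera`, `Building`, `project`, `pick`, `box`) and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    east: float       # camera position in local metres
    north: float
    up: float
    yaw_deg: float    # heading of the optical axis, clockwise from north
    pitch_deg: float  # elevation of the optical axis above the horizontal
    focal_px: float   # focal length expressed in pixels (assumed known)
    width: int        # image size in pixels
    height: int


@dataclass
class Building:
    name: str
    corners: list     # (east, north, up) tuples


def project(cam, point):
    """Project a 3D point to (col, row, depth); None if behind the camera."""
    de = point[0] - cam.east
    dn = point[1] - cam.north
    du = point[2] - cam.up
    yaw = math.radians(cam.yaw_deg)
    pitch = math.radians(cam.pitch_deg)
    # Unit vectors of the camera axes expressed in east/north/up.
    forward = (math.sin(yaw) * math.cos(pitch),
               math.cos(yaw) * math.cos(pitch),
               math.sin(pitch))
    right = (math.cos(yaw), -math.sin(yaw), 0.0)
    upward = (-math.sin(yaw) * math.sin(pitch),
              -math.cos(yaw) * math.sin(pitch),
              math.cos(pitch))
    depth = de * forward[0] + dn * forward[1] + du * forward[2]
    if depth <= 0:
        return None
    x = de * right[0] + dn * right[1] + du * right[2]
    y = de * upward[0] + dn * upward[1] + du * upward[2]
    col = cam.width / 2 + cam.focal_px * x / depth
    row = cam.height / 2 - cam.focal_px * y / depth
    return col, row, depth


def pick(cam, buildings, click_col, click_row):
    """Return the nearest building whose projected rectangle contains the click."""
    best, best_depth = None, math.inf
    for b in buildings:
        projected = [project(cam, c) for c in b.corners]
        if any(p is None for p in projected):
            continue  # simplification: skip buildings partly behind the camera
        cols = [p[0] for p in projected]
        rows = [p[1] for p in projected]
        depth = min(p[2] for p in projected)
        inside = (min(cols) <= click_col <= max(cols)
                  and min(rows) <= click_row <= max(rows))
        if inside and depth < best_depth:
            best, best_depth = b, depth
    return best


def box(e0, e1, n0, n1, height):
    """Eight corners of a box-shaped building standing on the ground."""
    return [(e, n, u) for e in (e0, e1) for n in (n0, n1) for u in (0.0, height)]


# Illustrative data only: a camera facing north and two buildings in line.
cam = Camera(0.0, 0.0, 1.5, 0.0, 0.0, 800.0, 640, 480)
buildings = [Building("A", box(-10, 10, 100, 120, 30)),
             Building("B", box(-20, 20, 200, 220, 60))]
hit = pick(cam, buildings, 320, 200)
print(hit.name if hit else "no object")
```

Choosing the nearest candidate stands in for the occlusion handling that a rendered CG image would provide; a real system would test the click against the drawn polygons rather than bounding rectangles.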

In this paper, we report the results of developing the "Fixed-position" system, the progress of developing the "Mobile" system, and the improvement plan for the future.

Development of "Fixed-position" system

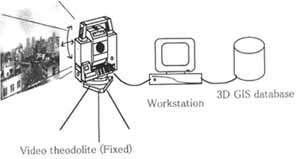

As the first step, the authors developed a "Fixed-position" system. The structure of this system is shown in [Fig-2]. It is composed of a workstation with a graphics library and a GIS database (3D location data of buildings in part of the Tokyo area), and a video theodolite (fixed at a certain position) for capturing real video images.

[Fig-2] Structure of "Fixed-position" system

The functions of this system are as follows:

- The video image and the CG image are overlaid almost exactly using the position and viewing angle of the video theodolite. Users can retrieve the information (name, address and distance from the video position) of a building by clicking the "REAL" building on the display (see [Fig-3]).

- In addition, a building that users are searching for can be shown (zoomed in on by the video camera) on the display by entering its name. An example output of this system is shown in [Fig-1].

This system has the following limitations:

- The video camera is fixed at a specific location, so the applicability is limited.

- Generation of the CG image takes a relatively long time, due to the large size of the 3D data.

- The elevation data in the database has only limited accuracy, so the overlay cannot be very exact.
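The direction and distance read-out and the search-by-name function described in this section can be sketched as follows. This is an illustration under stated assumptions, not the system's actual code: coordinates are local east/north/up metres, and the `BUILDINGS` table, its entries, and the function names are hypothetical. The returned azimuth and elevation are the angles a pan/tilt device such as the video theodolite would need to point at the target.

```python
import math

# Hypothetical attribute table: name -> (east, north, up) in local metres.
BUILDINGS = {
    "Sample Tower": (100.0, 100.0, 0.0),
    "Sample Hall": (0.0, -50.0, 50.0),
}


def direction_and_distance(viewpoint, target):
    """Return (azimuth_deg, elevation_deg, distance_m) from viewpoint to target.

    Azimuth is measured clockwise from north, elevation upward from the horizontal.
    """
    de = target[0] - viewpoint[0]
    dn = target[1] - viewpoint[1]
    du = target[2] - viewpoint[2]
    horizontal = math.hypot(de, dn)
    azimuth = math.degrees(math.atan2(de, dn)) % 360.0
    elevation = math.degrees(math.atan2(du, horizontal))
    distance = math.hypot(horizontal, du)
    return azimuth, elevation, distance


def find_by_name(name, viewpoint=(0.0, 0.0, 0.0)):
    """Look a building up by name; None if it is not in the table."""
    target = BUILDINGS.get(name)
    if target is None:
        return None
    return direction_and_distance(viewpoint, target)


print(find_by_name("Sample Tower"))
```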

Development of "Mobile" system

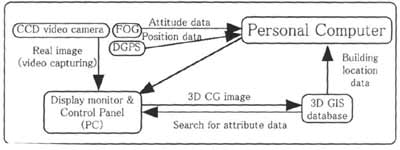

The configuration of the "Mobile" system is shown in [Fig-3].

[Fig-3] Structure of "Mobile-type" system

(All the parts should be put in the mobile terminal)

This "Mobile" system can have a much wider variety of applications, because users can retrieve necessary information whenever and wherever they want in real time. This function is enabled by obtaining the position and attitude data of the video camera using DGPS (Differential GPS) and FOG (Fiber Optic Gyroscope) in real time, and by fast drawing of the CG image. However, the function of searching for an object by its name has been removed, because this system currently has no keyboard as an input device.
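As a rough illustration of how position and attitude readings might be turned into the quantities needed for drawing the CG image, the sketch below converts a latitude/longitude fix into local east/north metres and accumulates angular-rate samples into a heading. Both steps are assumptions made for this example: the paper does not state which map projection is used or in what form the FOG delivers its angle data. The flat-earth approximation is only reasonable over a small test site, and the function names and sample values are hypothetical.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis


def to_local_metres(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Approximate (east, north) offset in metres from a nearby origin."""
    lat0 = math.radians(origin_lat_deg)
    east = EARTH_RADIUS_M * math.radians(lon_deg - origin_lon_deg) * math.cos(lat0)
    north = EARTH_RADIUS_M * math.radians(lat_deg - origin_lat_deg)
    return east, north


def integrate_heading(initial_heading_deg, rates_deg_per_s, dt_s):
    """Accumulate angular-rate samples (deg/s) into a heading in [0, 360)."""
    heading = initial_heading_deg
    for rate in rates_deg_per_s:
        heading += rate * dt_s
    return heading % 360.0


# Illustrative values only: a fix 0.001 degrees north-east of the origin.
east, north = to_local_metres(35.661, 139.731, 35.660, 139.730)
heading = integrate_heading(350.0, [10.0, 10.0, 10.0], 1.0)
print(round(east, 1), round(north, 1), heading)
```

Integrated gyroscope angles drift over time, so a practical system would need some way to reset or correct the heading; that is outside the scope of this sketch.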

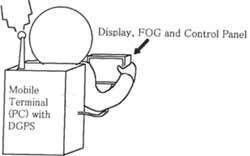

A conceptual picture of the mobile terminal we will use is shown in [Fig-4]. The user carries the terminal on his or her back and holds a small display and a control panel (with a mouse pointer).

[Fig-4] Conceptual image of using the "Mobile" system

So far, the software on the workstation of the "Fixed-position" system has been ported to a desktop PC, and 3D CG is drawn using the data from the GIS database, DGPS and FOG. The problems we anticipate are as follows:

- In urban areas, where we are going to use this system, sufficient signals from GPS satellites may not reach the terminal, which degrades the locational accuracy.

- Overlay of the images is not as exact as we expected, due to the limited accuracy of the original elevation data.

- Drawing of CG images should be made more efficient; otherwise an expensive, high-speed CPU is needed.
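To give a feel for how errors in position, elevation and angle translate into overlay misalignment, the following sketch applies simple pinhole-camera geometry. It is a back-of-the-envelope estimate under assumptions, not a result from the paper: the focal length in pixels, the error magnitudes and the distances are made-up illustrative values, and the function names are hypothetical.

```python
import math


def offset_from_metric_error_px(focal_px, error_m, distance_m):
    """Image shift caused by an error in metres perpendicular to the line of sight.

    Applies to an elevation error of the object or a lateral position error
    of the camera, seen at the given distance.
    """
    return focal_px * error_m / distance_m


def offset_from_angle_error_px(focal_px, angle_error_deg):
    """Image shift near the image centre caused by a camera angle error."""
    return focal_px * math.tan(math.radians(angle_error_deg))


# Illustrative values only: 800 px focal length, 5 m error, building 200 m away.
print(offset_from_metric_error_px(800.0, 5.0, 200.0))
print(round(offset_from_angle_error_px(800.0, 1.0), 2))
```

The first relation shows why the same metric error matters more for nearby buildings: halving the distance doubles the shift in pixels.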

Future Perspective

This system will be improved in the following directions:

- The data input device will be improved by introducing a pen-input device.

- Downsizing of the mobile terminal and improvement of the CPU and the hard disk capacity.

- Addition of a network function using PHS (Personal Handy-phone System), which has a very high data transfer speed (32 KB/sec). This makes it possible to access information on a fixed, large-capacity server system.

- Use of an HMD (Head Mounted Display, see [Fig-5]) to broaden the ways of using this system.

[Fig-5] Conceptual image of using an HMD (Head Mounted Display)

This system will be useful in the following fields:

- Personal navigation and information guide systems (with an HMD, we can walk along the street without any signs)

- Post-disaster investigation (we can retrieve past information about destroyed buildings at the site)

- Simulation of landscape (it is already common in CAD systems, but with this system we need not prepare photographic texture data ourselves)

- Daily inspection of structures (we can retrieve past inspection records at the site, overlaid on the real-time image)

- Night-time inspection of underground structures (we can see a 3D image of complicated gas, water and sewage pipes from above ground, even at night)

- Updating of GIS databases (information on land ownership and buildings is easily obtained and updated at the site)

We are deeply grateful to Zenrin Co., Ltd. for providing the building data of the test site.

References

- Takahiro Kawamura, Junichi Tatemura, Masao Sakauchi, "An Augmented Reality System using Landmarks from Realtime Video Image"

- Minoru Fujii, Ryosuke Shibasaki, Junichi Tatemura, "Development of Experimental GIS User Interface by Fusing Real Urban Landscape Image and 3-Dimensional Spatial Data" (in Japanese)

- Katashi Nagao, "Agent Augmented Reality: Integration of Real World and Information Worlds via Software Agents"

- M. Tani, K. Yamaashi, K. Tanikoshi, M. Futakawa, S. Tanifuji, "Object-oriented Video: Interaction with Real-World Objects through Live Video", CHI '92, May 3-7, 1992, pp. 593-598